AREA15: Research Project


Overview

AREA15 is an immersive entertainment venue in Las Vegas. As part of our project for them, we did extensive research to find an engaging interaction method for a mobile AR game other than a touchscreen interface.

Interaction Method Is Key to Engaging Experiences for Mobile AR

Interaction methods in augmented reality experiences can make or break the game for players. But there's a lot to consider when choosing which interaction method to implement and how to deliver the experience to users (e.g., via a headset like Magic Leap or an AR-enabled smartphone). We set out to find an interaction method for mobile AR other than a touchscreen. Along the way, we reviewed the relevant controllers and methods available in 2020 and arrived at many interesting conclusions.

Exploring Interaction Methods for a Mobile AR Game

Litho

Litho is a small mobile AR controller. With a futuristic look, Litho fit well into the sci-fi atmosphere of AREA15.

Setting up the SDK was straightforward, and Litho needed minimal initial calibration. Interaction works through a precise virtual laser pointer with a fixed origin. With 3 DOF readings, Litho can detect a hand’s orientation in space, but it can’t determine where the hand is located. This was a major drawback, and we had to drop Litho.
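To make the 3 DOF limitation concrete, here is a minimal sketch of a pointer ray driven by orientation only. It does not use the Litho SDK; the function names, the fixed origin value, and the forward axis are all assumptions chosen for illustration. The point it shows is that rotating the hand changes the ray's direction, while the ray's origin never moves because no position data is available.

```python
import math


def rotate_by_quaternion(q, v):
    """Rotate vector v by unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q_vec, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(q_vec, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def yaw_quaternion(degrees):
    """Unit quaternion for a rotation about the vertical (y) axis."""
    half = math.radians(degrees) / 2.0
    return (math.cos(half), 0.0, math.sin(half), 0.0)


def pointer_ray(orientation, fixed_origin=(0.2, -0.3, 0.0), forward=(0.0, 0.0, 1.0)):
    """Build a laser-pointer ray from a 3 DOF reading.

    Only the orientation comes from the controller. The origin is a
    hypothetical constant (roughly where a hand might rest relative to
    the phone), because a 3 DOF device reports no position.
    """
    direction = rotate_by_quaternion(orientation, forward)
    return fixed_origin, direction


# Two different hand orientations give two directions but the same origin.
origin_a, direction_a = pointer_ray(yaw_quaternion(0.0))
origin_b, direction_b = pointer_ray(yaw_quaternion(90.0))
```

With a 6 DOF controller, `fixed_origin` would instead be replaced by a tracked position on every frame, which is what an experience built around precise hand placement needs.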

On the flip side, Litho was immune to lighting conditions (which are poor at the venue; it’s dark and dim). And even though we didn’t go with Litho, it could work well in any mobile AR application that needs precision.

FinchDash / FinchShift

FinchDash and FinchShift are two AR/VR motion controllers. We used FinchDash in our research, because FinchShift doesn’t support mobile platforms.

FinchDash is a rather stripped-down version of FinchShift: it only has 3 DOF tracking, compared with the full 6 DOF input in FinchShift.

ManoMotion

ManoMotion is a camera-based hand-tracking SDK for mobile. ManoMotion supports Unity and doesn’t require additional calibration. It’s easy to set up. Plus, ManoMotion can scale from 2D tracking and 3D tracking of the hand position to full-body skeletal tracking with gesture recognition.

But ManoMotion, unfortunately, has a few technical limitations (common to all camera-based solutions for mobile AR). For example, the effective control area in ManoMotion is limited to the camera's field of view.
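The field-of-view limit can be illustrated with a small geometric check. This is a sketch only and does not use the ManoMotion SDK: the function name, the coordinate convention (x right, y up, z forward from the camera), and the default field-of-view angles are assumptions. It shows that a hand held off to the side or behind the phone cannot be tracked, however good the algorithm is.

```python
import math


def in_camera_fov(point, h_fov_deg=60.0, v_fov_deg=45.0):
    """Return True if a point in camera space falls inside the view frustum.

    point is (x, y, z) in meters, with z pointing forward from the camera.
    The field-of-view angles are illustrative defaults, not values taken
    from any specific phone.
    """
    x, y, z = point
    if z <= 0.0:
        # Behind the camera (or exactly at it): never visible.
        return False
    h_angle = math.degrees(math.atan2(abs(x), z))
    v_angle = math.degrees(math.atan2(abs(y), z))
    return h_angle <= h_fov_deg / 2.0 and v_angle <= v_fov_deg / 2.0


hand_in_front = in_camera_fov((0.0, 0.0, 0.5))   # straight ahead of the camera
hand_to_side = in_camera_fov((0.5, 0.0, 0.5))    # 45 degrees off-axis
hand_behind = in_camera_fov((0.0, 0.0, -0.5))    # behind the phone
```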

ManoMotion also relies on computer vision algorithms. In our case, this caused inaccuracies when lighting conditions changed frequently. The experience degraded in colored and dim lighting.

We reached out to ManoMotion, and the company adjusted their algorithm to account for colored or dim lighting present in our project. After the adjustment, the SDK worked well. ManoMotion is a promising and impressive SDK for mobile AR.

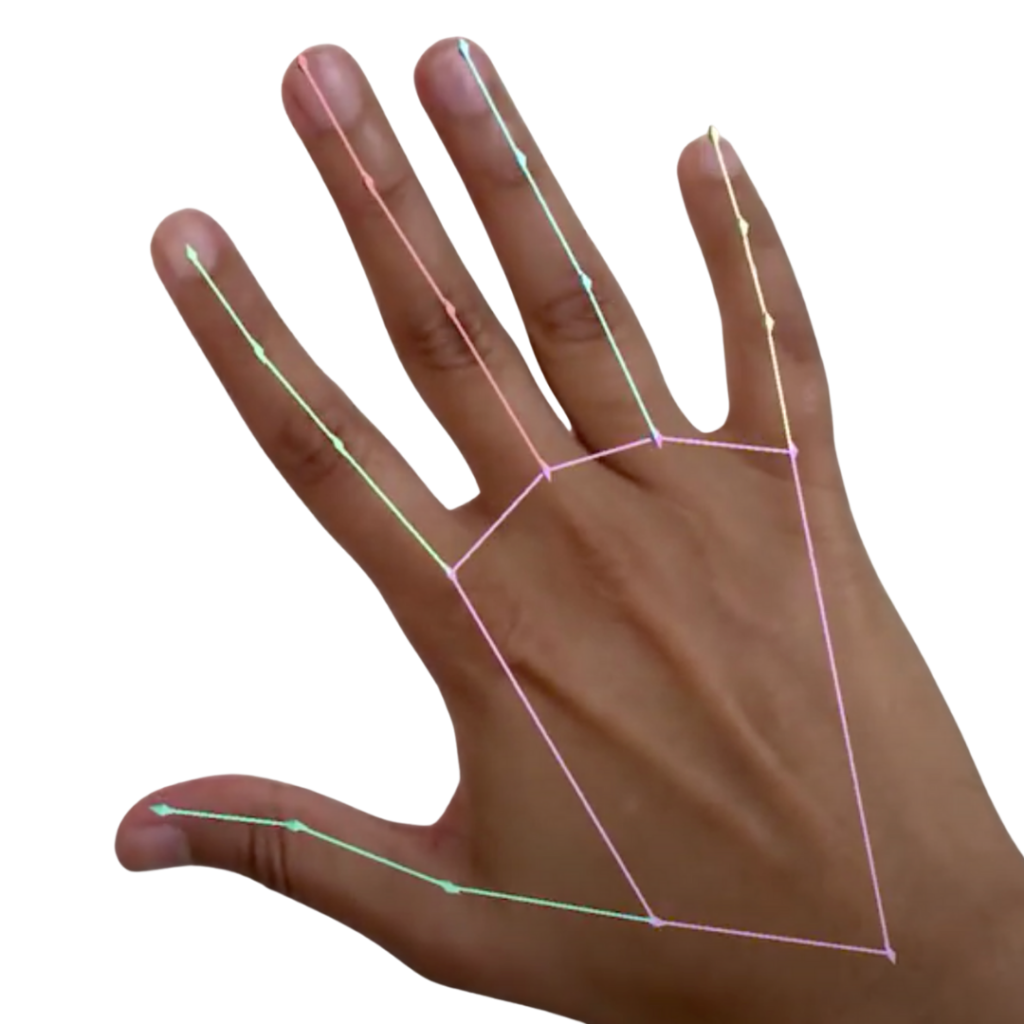

Google MediaPipe Hand Tracking

Google MediaPipe is another camera-based hand-tracking SDK, and so it has the same limitations in terms of effective control area and challenging lighting conditions.

Polhemus

Polhemus is a wearable hand-tracking solution that doesn’t rely on a smartphone’s camera, which makes it a promising option. Polhemus uses 6 DOF sensors and provides continuous tracking in complete darkness and with an unlimited field of vision.

Xsens DOT

Xsens DOT is a motion-tracking device with 3 DOF support, which gives accurate orientation data; however, the position is only an estimate. For our purposes, it didn’t turn out to be practical. But it could be useful in cases where more precise and specific motion tracking is required.
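A general reason position from inertial sensors tends to be only an estimate is that it usually has to be derived by integrating acceleration twice, so even a tiny sensor bias grows quadratically over time. The sketch below illustrates that effect under stated assumptions; it is not how Xsens DOT is implemented, and the function name, bias value, and sample rate are hypothetical.

```python
def integrate_position(accel_samples, dt):
    """Double-integrate acceleration samples (m/s^2) into a 1D position (m)."""
    velocity = 0.0
    position = 0.0
    for accel in accel_samples:
        velocity += accel * dt      # first integration: acceleration -> velocity
        position += velocity * dt   # second integration: velocity -> position
    return position


# A hand held perfectly still, measured by a sensor with a small constant bias.
bias = 0.05            # m/s^2, assumed for illustration
dt = 0.01              # 100 Hz sampling, assumed
samples = [bias] * 1000  # 10 seconds of readings

drift = integrate_position(samples, dt)  # roughly 0.5 * bias * t^2
```

After ten seconds the estimated position has drifted by about 2.5 meters even though the hand never moved, while orientation from the same kind of sensor stays usable.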

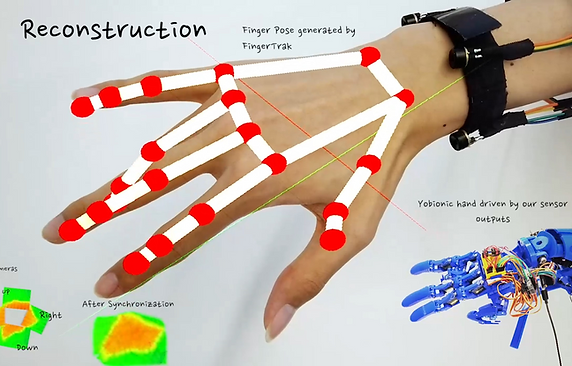

FingerTrak

FingerTrak is a hand-tracking device enclosed in a bracelet. It uses thermal cameras attached to a wristband to detect gestures. Because it doesn’t rely on the smartphone’s camera, FingerTrak isn’t limited by a narrow field of view. As of 2022, the solution looks more like a prototype than a finished product. But it will be interesting to see how the device evolves.

Leap Motion Controller

A relatively small sensor with cameras and skeletal tracking, Leap Motion Controller accurately detects a user’s hands. But we didn’t get to fully test the device because it doesn’t support mobile.

Apple AirPods Pro

Yes, you read that right. Someone tried to use AirPods Pro for 6 DOF tracking. Interestingly enough, the experiment proved that AirPods can offer more functionality than just playing audio.

Requirements for the Interaction Method

Works well in poor lighting conditions

This was one of the biggest requirements, as the venue was rather dark inside. Every camera-based solution will be somewhat affected by this, depending on its algorithms.

Reads hand motions accurately

The experiences we were creating for AREA15 required precise hand movement tracking. The lower the accuracy, the higher the risk of spoiling the experience for users.

Doesn't require additional hardware

The AR game is delivered via a mobile phone enclosed in a special mask. Adding another device would create too much of a burden for users to enjoy the experience. Plus, there are costs of maintenance to keep in mind.