What Mobile Augmented Reality App Interaction Methods Are There?

Litho

Litho is a very promising mobile AR controller. The device is small and has a futuristic look, which matched the sci-fi theme of our project really well.

The initial research showed great potential, and, since we developed our app in Unity and Litho offers an SDK for it, we decided to take it to round two of our evaluation.

Our colleague Dominik prepared a prototype that allowed us to determine how well Litho fit in with the requirements of our project.

The SDK setup was straightforward and implementing the prototype took relatively little time. From the video, you can see that Litho requires minimal initial calibration. The interaction is based on a precise virtual laser pointer with a fixed origin. Litho offers 3 DOF readings, meaning you can detect how the hand is oriented in space, but not where it’s located. The quality of the interactions doesn’t depend on the lighting conditions.
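To make the "laser pointer with a fixed origin" idea concrete, here is a minimal sketch of how a 3 DOF orientation reading can drive a pointer. It is not Litho's SDK: the function names, the `FIXED_ORIGIN` value, the forward axis, and the flat target plane are all assumptions made for illustration. The point is that only the orientation quaternion enters the calculation, so moving the hand without rotating it changes nothing.

```python
import math

# Hypothetical fixed ray origin in metres. A 3 DOF device reports no
# position, so this value never changes while the app runs.
FIXED_ORIGIN = (0.0, 0.0, 0.0)
# Assumed "forward" axis of the controller when it is not rotated.
FORWARD = (0.0, 0.0, 1.0)


def rotate_vector(q, v):
    """Rotate vector v by the unit quaternion q = (w, x, y, z)."""
    w, x, y, z = q
    vx, vy, vz = v
    # t = 2 * cross(q_xyz, v)
    tx = 2.0 * (y * vz - z * vy)
    ty = 2.0 * (z * vx - x * vz)
    tz = 2.0 * (x * vy - y * vx)
    # v' = v + w * t + cross(q_xyz, t)
    return (
        vx + w * tx + (y * tz - z * ty),
        vy + w * ty + (z * tx - x * tz),
        vz + w * tz + (x * ty - y * tx),
    )


def pointer_hit(orientation, plane_z, origin=FIXED_ORIGIN):
    """Return where the pointer ray meets the plane z = plane_z, or None."""
    dx, dy, dz = rotate_vector(orientation, FORWARD)
    if dz <= 1e-6:  # ray is parallel to the plane or points away from it
        return None
    t = (plane_z - origin[2]) / dz
    if t < 0.0:  # the plane lies behind the origin
        return None
    return (origin[0] + t * dx, origin[1] + t * dy, plane_z)


def yaw_quaternion(degrees):
    """Build a unit quaternion for a rotation about the vertical (y) axis."""
    half = math.radians(degrees) / 2.0
    return (math.cos(half), 0.0, math.sin(half), 0.0)


if __name__ == "__main__":
    # Turning the wrist 45 degrees moves the hit point sideways on a
    # plane two metres away; translating the hand would have no effect.
    print(pointer_hit(yaw_quaternion(45.0), plane_z=2.0))
```

A 6 DOF controller would replace the constant origin with a tracked hand position, which is exactly the input this kind of device cannot provide.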

After evaluation, we decided not to use Litho for our entertainment experience, mainly because it didn’t offer hand position tracking, which we considered crucial for achieving a high fun factor.

This mattered because adding any kind of external controller made the logistics more complex, so the entertainment value it provided needed to justify that extra cost.

Our app would be preinstalled on mobile devices, which would then be rented on the premises for the users to enjoy for a specified time.

Using any kind of controller required us to pair it with the devices, ensure it remained charged, and account for it when picking up the equipment once a visitor finished using the app.

Even though we decided not to use Litho in our project, I think it could work well in any mobile AR application where precise interaction is key and where users interact with the app regularly.

FinchDash / FinchShift

FinchDash and FinchShift are AR/VR motion controllers with a Unity SDK available. Of the two, only FinchDash is described as supporting mobile platforms, which is a bit unfortunate, as it only offers 3 DOF tracking.

Also, the controller’s rather unremarkable looks don’t fit well with themed experiences.

FinchShift, FinchDash's sibling device, uses an additional piece of equipment in the form of an armband to offer full 6 DOF input. It’s also more visually appealing in my opinion.

ManoMotion

ManoMotion is a camera-based mobile hand tracking solution that offers a Unity SDK. It’s easy to set up and doesn’t require additional calibration. Depending on your needs, it can scale from 2D tracking, through 3D tracking of the hand position, to full-blown skeletal tracking with gesture recognition.

As with any camera-based solution though, it has some technical limitations that need to be considered.

The main one is the effective control area, which is only as large as the camera’s field of view. This is even more significant in a mobile use case like ours: the device would be held in one hand, and you can only extend the other one so far before it becomes awkward.
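As a rough illustration of how small that control area can be, the sketch below computes the width of the region a camera can see at a given distance using basic pinhole geometry. The function name and the example numbers (a 65-degree horizontal field of view and a hand held 0.4 m from the camera) are assumptions chosen for the example, not measurements from our project or values from any SDK.

```python
import math


def tracked_extent(distance_m, fov_deg):
    """Width of the area visible to the camera at the given distance.

    Uses the pinhole relation: extent = 2 * distance * tan(fov / 2).
    """
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)


if __name__ == "__main__":
    # Hypothetical values: 65 degree horizontal FOV, hand 0.4 m away.
    width = tracked_extent(distance_m=0.4, fov_deg=65.0)
    print(f"Hand must stay within a strip about {width:.2f} m wide")
```

With these assumed values the usable strip is roughly half a metre wide, and it shrinks linearly as the hand moves closer to the phone.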

Another disadvantage is the reliance on computer vision algorithms, which makes the accuracy inconsistent across different lighting conditions. Colored light in particular can degrade the experience quite a bit.

That said, we had the chance to work with ManoMotion’s support on our challenging use case (dim colored lighting). It turns out that ManoMotion can adjust their algorithm if the target conditions are known in advance. In our case, this brought the accuracy in the challenging lighting to a level similar to that in optimal lighting, which was very impressive.

Google MediaPipe Hand Tracking

Google MediaPipe is an open-source camera-based hand tracking solution, similar to ManoMotion, and as such it shares the same limitations. It supports Android and iOS, but at the time of our research, it didn’t offer an officially supported Unity SDK.

ClayControl

ClayControl is another option in the category of camera-based hand tracking solutions. It seems to cover a wide range of platforms, including mobile, and has Unity on the list of compatible software platforms.

ClayControl’s website mentions low latency as one of the key selling points, which is interesting considering that solutions based on cameras and computer vision usually involve some degree of input lag. There seems to be no openly available SDK for it, so we didn’t have a chance to evaluate it.

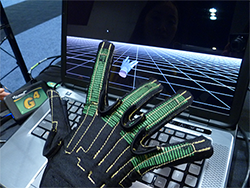

Polhemus

Polhemus is a very promising wearable camera-less hand tracking solution. It’s based on 6 DOF sensors, which don’t require a field of view and can provide continuous tracking even in complete darkness.

At the time of our research, however, it was PC-only. On the website, you can find information about VR, but unfortunately no AR support yet.

Xsens DOT

Although Xsens DOT is a motion-tracking device rather than an actual controller, it could be used as one with some additional work. It offers 3 DOF tracking, so the orientation data is accurate, but the position is only estimated.
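One general reason position derived from inertial data tends to be only an estimate is drift: integrating acceleration twice turns even a tiny constant sensor bias into an error that grows with the square of time. The sketch below illustrates that effect in one dimension. It is not a description of how Xsens DOT computes position, and the bias value, sample rate, and function name are hypothetical.

```python
def integrate_position(accel_samples, dt):
    """Integrate acceleration twice (simple Euler steps) to get position."""
    velocity = 0.0
    position = 0.0
    for accel in accel_samples:
        velocity += accel * dt
        position += velocity * dt
    return position


if __name__ == "__main__":
    # Hypothetical sensor: perfectly still, but reporting a constant
    # 0.05 m/s^2 bias, sampled at 100 Hz for 10 seconds.
    bias = 0.05
    dt = 0.01
    samples = [bias] * 1000
    drift = integrate_position(samples, dt)
    # Analytically the error is about 0.5 * bias * t^2.
    print(f"Apparent movement of a stationary sensor: {drift:.2f} m")
```

Orientation does not suffer in the same way because it needs only a single integration of the gyroscope signal and can be corrected against gravity, which is why a 3 DOF reading can stay accurate while position cannot.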

It’s smaller than Litho, which itself is quite small. At the time of writing, I wouldn't consider it practical for typical AR interactions. It might be worth considering for more specific motion tracking needs.

FingerTrak

FingerTrak is another promising wearable, this time in the form of a bracelet. It allows continuous hand pose tracking using relatively small thermal cameras attached to a wristband.

On its own, FingerTrak can detect gestures without the field of view limitation that affects the other camera-based solutions. It doesn’t look like a finished product yet, but it seems to be an interesting approach that could turn out well in the future.

Leap Motion Controller

Leap Motion Controller uses a relatively small sensor with cameras and a skeletal tracking algorithm to accurately detect the user’s hands. Unfortunately, at the time of writing, it doesn’t support mobile yet.

Apple AirPods Pro

Wait, what? As surprising as it sounds, someone actually seems to have tried using AirPods Pro for 6 DOF tracking! This is more of a curio, but if the video is actually legitimate, AirPods open up some unexpected possibilities.

So Is There a Non-Touch Screen Interaction Method Production-Ready for Mobile AR?

Some of the solutions described in the article, such as Litho and FinchDash, can already be used as a convenient input method for mobile AR. Although they’re external devices that keep the user’s hand occupied, they seem to work as an accurate input method that can be very intuitive after an initial period of getting used to them.

Camera-based hand tracking solutions like ManoMotion, Google MediaPipe, and ClayControl hold the promise of natural, hands-free mobile AR interactions. To some extent, they already deliver on it and can be used for controlling actual apps.

But the limited tracking area and dependency on lighting conditions make them, based on our tests, somewhat unreliable and, at times, cumbersome to use.

If you consider using hand tracking as a primary input method for your app, be prepared to spend the extra time designing the UX. Test early and often, taking into account various lighting conditions — you want to make sure the limitations won’t get in your users’ way.

Wearable motion tracking solutions like Polhemus and Xsens DOT aren’t designed for mobile interactions, but if at some point they evolve in that direction, they could become a viable alternative to the camera-based hand tracking approach, addressing its main limitations.

Mobile AR input methods, just like the AR technologies themselves, are still evolving. We expect it might take a couple of years before a solution emerges that will replace the touchscreen as the default interface.
